First, a quick “congratulations!” to my brother-in-law on graduating from Boston College’s School of Law! Law School ain’t easy, and graduating cum laude is even tougher. Well done – I salute you with a filet mignon on a flaming sword!

The graduation ceremony took place on the Boston College campus. BC has considerable “juice”, and as such, they were able to land a pretty high-up commencement speaker: Ben Bernanke, Chairman of the Board of Governors of the United States Federal Reserve (a.k.a. “The Fed”) and “Fourth Most Powerful Person in the World” according to Newsweek. It’s the first commencement ceremony I’ve ever been to that had the Secret Service (or whatever federal cops get assigned to high-ranking non-Executive Branch people) present.

There were no great revelations in Bernanke’s speech; I was hoping that he’d reveal some plan for saving the economy by decoding the hints hidden in the U.S. Constitution that would lead us to a hidden stash of gold, a la the National Treasure movies, but no such thing happened. If he has new ideas for bringing about economic recovery, he didn’t give them away – in fact, he told the business reporters that they might as well go get some coffee, as he wasn’t going to say anything about the markets or monetary policy.

Instead, his speech had the usual platitudes and advice – you’re at the start of a great journey, be flexible, embrace change, don’t be afraid to go outside your comfort zone – modified to suit the times, including the obligatory reference to the current state of the economy, which he said has “dominated my waking hours” for the past twenty-one months. He also talked about how he went from a South Carolina boy with no expectation of moving far from home to his current position, providing some biographical information that I didn’t know before. It wasn’t a bad speech – I’ve heard longer and less interesting ones at other commencements – but aside from a J.K. Rowling joke, it wasn’t anything out of the ordinary.

Here’s the complete transcript of his speech, which you can also find at The Fed’s Board of Governors site:

I am very pleased to have the opportunity to address the graduates of the Boston College Law School today. I realized with some chagrin that this is the third year in a row that I have given a commencement address here in the First Federal Reserve District, which is headquartered at the Federal Reserve Bank of Boston. This part of the country certainly has a remarkable number of fine universities. I will have to make it up to the other 11 Districts somehow.

Along those lines, last spring I was nearby in Cambridge, speaking at Harvard University’s Class Day. The speaker at the main event, the Harvard graduation the next day, was J. K. Rowling, author of the Harry Potter books. Before my remarks, the student who introduced me took note of the fact that the senior class had chosen as their speakers Ben Bernanke and J. K. Rowling, or, as he put it, "two of the great masters of children’s fantasy fiction." I will say that I am perfectly happy to be associated, even in such a tenuous way, with Ms. Rowling, who has done more for children’s literacy than any government program I know of.

I get a number of invitations to speak at commencements, which I find a bit puzzling. A practitioner, like me, of the dismal science of economics–and it is even more dismal than usual these days–is not usually the first choice for providing inspiration and uplift. I will do my best, though, and in that spirit I will take a more personal perspective than usual in my remarks today. The business reporters should go get coffee or something, because I am not going to say anything about the markets or monetary policy.

Instead, I’d like to offer a few thoughts today about the inherent unpredictability of our individual lives and how one might go about dealing with that reality. As an economist and policymaker, I have plenty of experience in trying to foretell the future, because policy decisions inevitably involve projections of how alternative policy choices will influence the future course of the economy. The Federal Reserve, therefore, devotes substantial resources to economic forecasting. Likewise, individual investors and businesses have strong financial incentives to try to anticipate how the economy will evolve. With so much at stake, you will not be surprised to know that, over the years, many very smart people have applied the most sophisticated statistical and modeling tools available to try to better divine the economic future. But the results, unfortunately, have more often than not been underwhelming. Like weather forecasters, economic forecasters must deal with a system that is extraordinarily complex, that is subject to random shocks, and about which our data and understanding will always be imperfect. In some ways, predicting the economy is even more difficult than forecasting the weather, because an economy is not made up of molecules whose behavior is subject to the laws of physics, but rather of human beings who are themselves thinking about the future and whose behavior may be influenced by the forecasts that they or others make. To be sure, historical relationships and regularities can help economists, as well as weather forecasters, gain some insight into the future, but these must be used with considerable caution and healthy skepticism.

In planning our own individual lives, we all have a strong psychological need to believe that we can control, or at least anticipate, much of what will happen to us. But the social and physical environments in which we live, and indeed, we ourselves, are complex systems, if you will, subject to diverse and unforeseen influences. Scientists and mathematicians have discussed the so-called butterfly effect, which holds that, in a sufficiently complex system, a small cause–the flapping of a butterfly’s wings in Brazil–might conceivably have a disproportionately large effect–a typhoon in the Pacific. All this is to put a scientific gloss on what you probably know from everyday life or from reading good literature: Life is much less predictable than we would wish. As John Lennon once said, "Life is what happens to you while you are busy making other plans."

Our lack of control over what happens to us might be grounds for an attitude of resignation or fatalism, but I would urge you to take a very different lesson. You may have limited control over the challenges and opportunities you will face, or the good fortune and trials that you will experience. You have considerably more control, however, over how well prepared and open you are, personally and professionally, to make the most of the opportunities that life provides you. Any time that you challenge yourself to undertake something worthwhile but difficult, a little out of your comfort zone–or any time that you put yourself in a position that challenges your preconceived sense of your own limits–you increase your capacity to make the most of the unexpected opportunities with which you will inevitably be presented. Or, to borrow another aphorism, this one from Louis Pasteur: "Chance favors the prepared mind."

When I look back at my own life, at least from one perspective, I see a sequence of accidents and unforeseeable events. I grew up in a small town in South Carolina and went to the public schools there. My father and my uncle were the town pharmacists, and my mother, who had been a teacher, worked part-time in the store. I was a good student in high school and expected to go to college, but I didn’t see myself going very far from home, and I had little notion of what I wanted to do in the future.

Chance intervened, however, as it so often does. I had a slightly older friend named Ken Manning, whom I knew because his family shopped regularly at our drugstore. Ken’s story is quite interesting, and a bit improbable, in itself. An African American, raised in a small Southern town during the days of racial segregation, Ken nevertheless found his way to Harvard for both a B.A. and a Ph.D., and he is now a professor at MIT, not too far from here. Needless to say, he is an exceptional individual, in his character and determination as well as his remarkable intellectual gifts.

Anyway, for reasons that have never been entirely clear to me, Ken made it his personal mission to get me to come to Harvard also. I had never even considered such a possibility–where was Harvard, exactly? Up North, I thought–but Ken’s example and arguments were persuasive, and I was (finally) persuaded. Fortunately, I got in. It probably helped that Harvard was not at the time getting lots of applications from South Carolina.

We all have moments we will never forget. One of mine occurred when I entered Harvard Yard for the first time, a 17-year-old freshman. It was late on Saturday night, I had had a grueling trip, and as I entered the Yard, I put down my two suitcases with a thump. I looked around at the historic old brick buildings, covered with ivy. Parties were going on, students were calling to each other across the Yard, stereos were blasting out of dorm windows. I took in the scene, so foreign to my experience, and I said to myself, "What have I done?"

At some level, I really had no idea what I had done, or what the consequences would be. All I knew was that I had chosen to abandon the known and comfortable for the unknown and challenging. But for me, at least, the expansion of horizons was exactly what I needed at that time in my life. I suspect that, for many of you, matriculation at the Boston College law school represented something similar–a leap into the unknown and new, with consequences and opportunities that you could hardly have guessed in advance. But, in some important ways, leaving the known and comfortable was exactly the point of the exercise. Each of you is a different person than you were three years ago, not only more knowledgeable in the law, but also possessing a greater understanding of who you are–your weaknesses and strengths, your goals and aspirations. You will be learning more about the fundamental question of who you really are for the rest of your life.

After I arrived at college, unpredictable factors continued to shape my future. In college I chose to major in economics as a compromise between math and English, and because a senior economics professor liked a paper I wrote and offered me a summer job. In graduate school at MIT, I became interested in monetary and financial history when a professor gave me several books to read on the subject. I found historical accounts of financial crises particularly fascinating. I determined that I would learn more about the causes of financial crises, their effects on economic performance, and methods of addressing them. Little did I realize then how relevant that subject would become one day. Later I met my wife Anna, to whom I have been married now for 31 years, on a blind date.

After finishing graduate school, I began a career as an economics professor and researcher. I pursued my interests from graduate school by delving deeply into the causes of the Great Depression of the 1930s, along with many other topics in macroeconomics, monetary policy, and finance. During my time as a professor, I tried to resist the powerful forces pushing scholars to greater and greater specialization and instead did my best to keep as broad a perspective as possible. I read outside my field. I did empirical research, studied history, wrote theoretical papers, and established connections, usually in a research or advisory role, with the Fed and other central banks.

In the spring of 2002, I was asked by the Administration whether I might be interested in being appointed to the Federal Reserve’s Board of Governors. I was not at all sure that I wanted to take the time from teaching and research. But this was soon after 9/11, and I felt keenly that I owed my country my service. Moreover, I told myself, the experience would be useful for my research when I returned to my post at Princeton. I decided to take a two-year leave to go to Washington. Well, once again, so much for foresight. I have now been in Washington nearly seven years, serving first as a Fed governor, then chairman of the President’s Council of Economic Advisers. In the fall of 2005, President Bush appointed me to be Chairman of the Fed, effective with the retirement of Alan Greenspan at the end of January 2006.

You will not be surprised to hear that events since January 2006 have not been precisely as I anticipated, either. My colleague, Bank of England Governor Mervyn King, has said that the object of central banks should be to make monetary policy as boring as possible. Unfortunately, by that metric we have not been successful. The financial crisis that began in August 2007 is the most severe since the Great Depression, and it has been the principal cause of the global recession that began last fall. Battling that crisis and trying to mitigate its effect on the U.S. and global economies has dominated my waking hours now for some 21 months. My colleagues at the Fed and I have been called on to take many tough decisions, including adopting extraordinary and unprecedented policy measures to address the crisis.

I think you will agree that the chain of events that began with my decision to go far from home for college and has culminated–so far–with the role I am playing today in U.S. economic policymaking is so unlikely that we could have safely ruled it out of consideration. Nevertheless, of course, it happened. Although I never could have prepared in advance for the specific events of the past 21 months, I believe that my efforts throughout my life to expand my horizons and to keep a broad perspective–for example, to study and write about economic and financial history, as well as more conventional topics in macroeconomics and monetary economics–have helped me better meet the challenges that have come my way. At the same time, because I appreciate the role of chance and contingency in human events, I try to be appropriately realistic about my own capabilities. I know there is much that I don’t know. I consequently try to be attentive to all points of view, to work collaboratively, and to involve as many smart people in policy decisions as possible. Fortunately, my colleagues and the staff at the Federal Reserve are outstanding. And indeed, many of them have demonstrated their own breadth and flexibility, moving well beyond their previous training and experience to tackle a wide range of novel and daunting issues, usually with great success.

Law is like economics in that, although it has its own esoterica known only to initiates, it is at bottom a craft whose value lies primarily in its practical application. You cannot know today what problems or challenges you will face in the course of your professional lives. Thus, I hope that, even as you continue to acquire expertise in specific and sometimes narrow aspects of the law, you will continue to maintain a broad perspective and willingness, indeed an eagerness, to expand the range of your knowledge and experience.

I have spoken a bit about the economic and financial challenges that we face. How do these challenges bear on the prospects of the graduates of 2009? The economic situation is a trying one, as you know. We are in a recession, and the labor market is weak. Many of you may not have gotten the job you wanted; some may have had offers rescinded or the start of employment delayed. I do not minimize those constraints and disappointments in any way. Restoring economic prosperity and maximizing economic opportunity are the central focus of our efforts at the Fed.

Nevertheless, you are in some ways very lucky. You have been trained in a field, law, that is exceptionally broad in its compass. At the Federal Reserve, lawyers are involved in every aspect of our policies and operations–not just because they know the legal niceties, but because they possess analytical tools that bear on almost any problem. In law school you have honed your skills in reasoning, reading, and writing. Many of you have work experience or bring backgrounds to bear ranging from history to political science to the humanities to science. There will always be a need for people with your abilities and talents.

So, my advice to you is to stay optimistic. Things usually have a way of working out. My second piece of advice is to be flexible, even adventurous as you begin your careers. As I have tried to illustrate today, you are much less able than you think to foresee how your life, both professional and personal, will play out. The world changes too fast, and too many accidents and unpredictable events occur. It will pay, therefore, to be creative and open-minded as you search for and consider professional opportunities. Look most carefully at those options that will give you a chance to learn new things, explore new areas, and grow as a person. Think of every job as a potential investment in yourself. Will it prepare your mind for the opportunities that chance will provide?

You are lucky also to be living and studying in the United States. There is a lot of pessimistic talk now about the future of America’s economy and its role in the world. Such talk accompanies every period of economic weakness. The United States endured a decade-long Great Depression and returned to prosperity and global leadership. When I graduated from college in 1975, and from graduate school in 1979, the economy was sputtering, gas prices and inflation were high, and pessimism–malaise, President Carter called it–was rampant. The U.S. economy subsequently entered more than two decades of growth and prosperity. The economy will recover–it has too many fundamental strengths to be kept down for too long–and the mood will brighten.

This is not to ignore real challenges. Our society is aging, implying higher health-care costs and fiscal burdens. We need to save more as a country, to reduce global imbalances in saving and investment, and to set the stage for continued growth. Our educational system is strong in some areas, including our university system, but does not serve everyone equally well, contributing to slower growth and greater income disparities. In the diverse capacities for which your training has prepared you, many of you will play a vital role in addressing these problems, both in the public and private spheres.

I conclude with congratulations to the graduates, your families, and friends. You have worked hard and accomplished much. You have a great deal to look forward to, as many interesting and gratifying opportunities await you. I hope that as you enter or re-enter the working world, you make sure to stay flexible and open-minded and to learn whenever you can. That’s the best way to deal with the unpredictabilities that are inherent in life. I wish you the best of luck, with the proviso that luck is what you make of it.

And perhaps you will advise next year’s class to invite J. K. Rowling.

Monarch Burger went to the trouble of making their apple pie look like a slice of homemade apple pie. While it seems appealing in its photo on the menu, it sets up a false expectation. It may look like a slice of homemade apple pie, but it certainly doesn’t taste like one. Naturally, it flopped. Fast-food restaurants are set up to be run not by trained chefs, but by a low-wage, low-skill, disinterested staff. As a result, their food preparation procedures are designed to run on little thinking and no passion. They’re not set up to create delicious homemade apple pies.

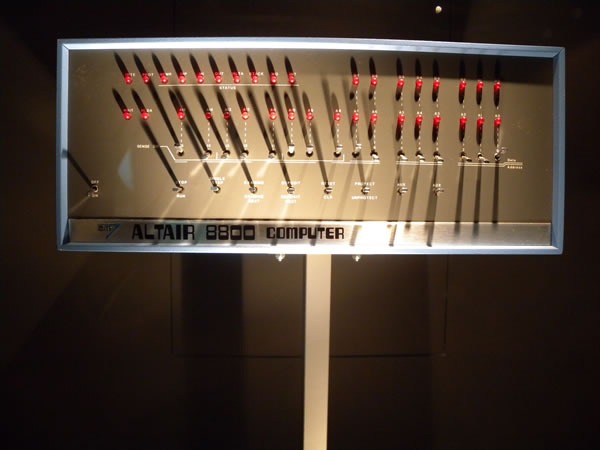

![80s-era computers: Apple \]\[, Commodore 64, TRS-80 and IBM PC](https://www.joeydevilla.com/wordpress/wp-content/uploads/2009/05/80s-computers.jpg)
